In addition to using texture coordinates that are built into the model, it is also possible to generate texture coordinates at runtime. Usually you would use this technique to achieve some particular effect, such as projective texturing or environment mapping, but sometimes you may simply want to apply a texture to a model that does not already have texture coordinates, and generating them at runtime is the only way to do that without modifying the model itself.

The texture coordinates produced by this technique are computed on the fly and are not stored within the model. When you turn off the generation mode, the texture coordinates cease to exist.

Use the following NodePath method to enable automatic generation of texture coordinates, where textureStage is the TextureStage whose coordinates should be generated:

```python
nodePath.setTexGen(textureStage, texGenMode)
```

The texGenMode parameter specifies how the texture coordinates are to be computed, and may be any of the following options. In the list below, "eye" means the coordinate space of the observing camera, and "world" means world coordinates, i.e. the coordinate space of render, the root of the scene graph.

| Mode | Description |
| --- | --- |
| TexGenAttrib.MWorldPosition | Copies the (x, y, z) position of each vertex, in world space, to the (u, v, w) texture coordinates. |
| TexGenAttrib.MEyePosition | Copies the (x, y, z) position of each vertex, in camera space, to the (u, v, w) texture coordinates. |
| TexGenAttrib.MWorldNormal | Copies the (x, y, z) lighting normal of each vertex, in world space, to the (u, v, w) texture coordinates. |
| TexGenAttrib.MEyeNormal | Copies the (x, y, z) lighting normal of each vertex, in camera space, to the (u, v, w) texture coordinates. |
| TexGenAttrib.MEyeSphereMap | Generates (u, v) texture coordinates based on the lighting normal and the view vector to apply a standard reflection sphere map. |
| TexGenAttrib.MEyeCubeMap | Generates (u, v, w) texture coordinates based on the lighting normal and the view vector to apply a standard reflection cube map, computed in camera space. |
| TexGenAttrib.MWorldCubeMap | Generates (u, v, w) texture coordinates based on the lighting normal and the view vector to apply a standard reflection cube map, computed in world space. |
| TexGenAttrib.MPointSprite | Generates (u, v) texture coordinates in the range (0, 0) to (1, 1) for large points so that the full texture covers the square. This is a special mode that should only be applied when you are rendering sprites, special point geometry that is rendered as squares. It doesn't make sense to apply this mode to any other kind of geometry. Normally you wouldn't set this mode directly; let the SpriteParticleRenderer do it for you. |
| TexGenAttrib.MLightVector | Generates special (u, v, w) texture coordinates that represent the vector from each vertex to a particular Light in the scene graph, in each vertex's tangent space. This is used to implement normal maps. This mode requires that each vertex have a tangent and a binormal computed for it ahead of time; you must also specify the NodePath that represents the direction of the light. Normally you wouldn't set this mode directly either; use NodePath.setNormalMap(), or implement normal maps using programmable shaders. |
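
To make a few of these modes concrete, here is a small pure-Python sketch of what MWorldPosition, MEyePosition, and MEyeSphereMap compute for a single vertex. It illustrates the math only and is not Panda's implementation: the 4x4 matrices, the helper names, and the row-vector convention are assumptions made for the example. The sphere-map function uses the classic OpenGL sphere-map formula in OpenGL's eye space (camera at the origin looking down -z); that MEyeSphereMap reduces to this formula is an assumption.

```python
import math

# Illustrative sketch only (not Panda3D's implementation). Matrices are 4x4
# nested lists in row-vector convention: p' = p * M, translation in the last row.


def transform_point(p, m):
    """Transform a 3-D point (implicit w = 1) by a 4x4 row-vector matrix."""
    x, y, z = p
    return tuple(x * m[0][c] + y * m[1][c] + z * m[2][c] + m[3][c] for c in range(3))


def normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)


def world_position_uvw(vertex, model_to_world):
    # MWorldPosition: (u, v, w) is the vertex's (x, y, z) in world space.
    return transform_point(vertex, model_to_world)


def eye_position_uvw(vertex, model_to_world, world_to_eye):
    # MEyePosition: (u, v, w) is the vertex's (x, y, z) in camera space.
    return transform_point(transform_point(vertex, model_to_world), world_to_eye)


def sphere_map_uv(eye_pos, eye_normal):
    # Classic OpenGL sphere-map formula, in OpenGL eye space (camera at the
    # origin looking down -z).
    view = normalize(eye_pos)                             # eye-to-vertex direction
    n = normalize(eye_normal)
    d = sum(a * b for a, b in zip(view, n))
    r = tuple(a - 2.0 * d * b for a, b in zip(view, n))  # reflection vector
    m = 2.0 * math.sqrt(r[0] ** 2 + r[1] ** 2 + (r[2] + 1.0) ** 2)
    return (r[0] / m + 0.5, r[1] / m + 0.5)


# Usage: a model translated by (10, 20, 30), and a camera at that same spot
# with its axes aligned to the world axes.
model_to_world = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [10, 20, 30, 1]]
world_to_eye = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [-10, -20, -30, 1]]
print(world_position_uvw((1, 2, 3), model_to_world))              # (11, 22, 33)
print(eye_position_uvw((1, 2, 3), model_to_world, world_to_eye))  # (1, 2, 3)
print(sphere_map_uv((0, 0, -5), (0, 0, 1)))                       # (0.5, 0.5)
```

A surface facing straight back at the camera reflects the view vector back toward the eye, which lands at the center of the sphere map.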

Note that several of the above options generate 3-D texture coordinates: (u, v, w) instead of just (u, v). The third coordinate may be important if you have a 3-D texture or a cube map (described later), but if you just have an ordinary 2-D texture the extra coordinate is ignored. (However, even with a 2-D texture, you might apply a 3-D transform to the texture coordinates, which would bring the third coordinate back into the equation.)
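
A tiny sketch of the point about the third coordinate: a 2-D lookup discards w, so two coordinates that differ only in w select the same spot in the texture, but once a 3-D transform mixes w into v they no longer do. The swap matrix here is hypothetical, chosen only because its effect is easy to read.

```python
# Illustrative sketch: a 2-D lookup reads only (u, v), but a 3-D texture
# transform can move w into one of the coordinates that is actually read.


def lookup_2d(uvw):
    u, v, _w = uvw  # the third coordinate is dropped
    return (u, v)


def apply_3x3(uvw, m):
    # Row vector times a 3x3 matrix: result[c] = sum over r of uvw[r] * m[r][c]
    return tuple(sum(uvw[r] * m[r][c] for r in range(3)) for c in range(3))


# Hypothetical transform that swaps v and w.
SWAP_VW = [[1, 0, 0],
           [0, 0, 1],
           [0, 1, 0]]

a = (0.25, 0.5, 0.0)
b = (0.25, 0.5, 0.9)  # differs from a only in w
print(lookup_2d(a) == lookup_2d(b))                                          # True
print(lookup_2d(apply_3x3(a, SWAP_VW)) == lookup_2d(apply_3x3(b, SWAP_VW)))  # False
```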

Also, note that almost all of these options have a very narrow purpose; you would generally use each of them only to produce the particular effect it was designed for. This manual will discuss these special-purpose TexGen modes in later sections, as each effect is discussed; for now, you only need to understand that they exist, and not worry about exactly what they do.

The mode that is most likely to have general utility is the first one: MWorldPosition. This mode converts each vertex's (x, y, z) position into world space, and then copies those three values to the (u, v, w) texture coordinates. This means, for instance, that if you apply a normal 2-D texture to the object, the object's world-space (x, y) position will be used to look up colors in the texture.
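
The following sketch shows how a world-space position could select a texel of a 2-D texture under MWorldPosition. It assumes the texture uses repeat wrap mode, so only the fractional part of each coordinate matters and the pattern repeats once per world unit; texel_index, wrap_repeat, and the 4x4 texture size are hypothetical.

```python
import math

# Illustrative sketch of how MWorldPosition coordinates could pick a texel of
# a 2-D texture, assuming repeat wrap mode. Names here are hypothetical.


def wrap_repeat(coord):
    # Repeat wrapping keeps only the fractional part, so the texture tiles
    # once per unit of texture-coordinate space.
    return coord - math.floor(coord)


def texel_index(world_pos, width, height):
    u, v, _w = world_pos  # MWorldPosition copies (x, y, z); a 2-D texture ignores w
    s, t = wrap_repeat(u), wrap_repeat(v)
    # min() guards against s or t rounding up to exactly 1.0
    return (min(int(s * width), width - 1), min(int(t * height), height - 1))


# Two world positions one unit apart in x and y (and at different heights)
# land on the same texel of a 4x4 texture.
print(texel_index((0.25, 0.75, 5.0), 4, 4))   # (1, 3)
print(texel_index((1.25, -0.25, 0.0), 4, 4))  # (1, 3)
```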

For instance, the teapot.egg sample model that ships with Panda has no texture coordinates built into the model, so you cannot normally apply a texture to it. But you can enable automatic generation of texture coordinates and then apply a texture:

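```python
from panda3d.core import TextureStage, TexGenAttrib

# Assumes a running ShowBase app, which provides the loader and render globals.
teapot = loader.loadModel('teapot.egg')
teapot.reparentTo(render)
tex = loader.loadTexture('maps/color-grid.rgb')
teapot.setTexGen(TextureStage.getDefault(), TexGenAttrib.MWorldPosition)
teapot.setTexture(tex)
```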

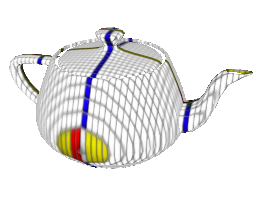

And you end up with something like this:

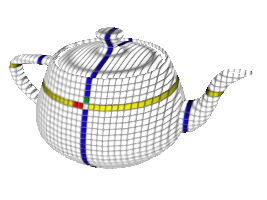

You can combine this with a texture transform to manipulate the texture coordinates further. For instance, to rotate the generated texture coordinates 90 degrees in pitch (about the x axis), you could do something like this:

```python
from panda3d.core import TextureStage, TransformState, VBase3
teapot.setTexTransform(TextureStage.getDefault(), TransformState.makeHpr(VBase3(0, 90, 0)))
```
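
To see what the pitch rotation in that call does to MWorldPosition coordinates, here is a pure-Python sketch of a 90-degree rotation about the x axis applied to (u, v, w). It assumes Panda's convention that a positive pitch rotates +Y toward +Z, and that the texture matrix rotates texture coordinates the same way makeHpr() rotates points; pitch_matrix and apply_3x3 are hypothetical helpers.

```python
import math

# Illustrative sketch, assuming Panda3D's convention that a positive pitch
# rotates +Y toward +Z, and that the texture matrix rotates (u, v, w) the same
# way makeHpr() rotates points. Helper names are hypothetical.


def pitch_matrix(pitch_degrees):
    """3x3 rotation about the x axis in row-vector convention (p' = p * M)."""
    p = math.radians(pitch_degrees)
    c, s = math.cos(p), math.sin(p)
    return [[1.0, 0.0, 0.0],
            [0.0, c, s],
            [0.0, -s, c]]


def apply_3x3(uvw, m):
    return tuple(sum(uvw[r] * m[r][col] for r in range(3)) for col in range(3))


# MWorldPosition gives (u, v, w) = (x, y, z); after the 90-degree pitch the
# coordinates become (x, -z, y), up to floating-point rounding.
x, y, z = 1.0, 2.0, 3.0
u, v, w = apply_3x3((x, y, z), pitch_matrix(90))
print(round(u, 9), round(v, 9), round(w, 9))  # 1.0 -3.0 2.0
```

With an ordinary 2-D texture only (u, v) = (x, -z) is read, so under these assumptions the texture is now projected along the world y axis rather than down the z axis.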

Finally, note that the only two choices for the coordinate frame of texture coordinate generation are "world" and "eye", which correspond to the root NodePath and the camera NodePath, respectively. But what if you want to generate the texture coordinates relative to some other node, say the teapot itself? The above images are all well and good for a teapot that happens to be situated at the origin, but what if we want the teapot's texture to stay the same when we move it somewhere else in the world?

If you use only MWorldPosition, then when you change the teapot's position, for instance by parenting it to a moving node, the teapot will seem to move while its texture pattern stays in place, which is probably not the effect you had in mind. What you probably intended was for the teapot to take its texture pattern along with it as it moves around. To do this, you will need to compute the texture coordinates in the space of the teapot node, rather than in world space.

Panda3D can generate texture coordinates in the coordinate space of any node you like. To do this, use MWorldPosition in conjunction with Panda's "texture projector", which applies the relative transform between any two NodePaths to the texture transform. You can use it to apply the relative transform from world space to teapot space, like this:

```python
teapot.setTexGen(TextureStage.getDefault(), TexGenAttrib.MWorldPosition)
teapot.setTexProjector(TextureStage.getDefault(), render, teapot)
```
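
The following pure-Python sketch shows why that pairing works: MWorldPosition produces world-space coordinates, and the render-to-teapot relative transform is the inverse of the teapot's net transform, so applying it brings the coordinates back to teapot space no matter where the teapot is placed. Only a rotation about the z axis plus a translation is modeled, and every helper name is hypothetical; this is a sketch of the math, not of Panda's internals.

```python
import math

# Illustrative sketch (not Panda3D's implementation) of MWorldPosition plus a
# render-to-teapot texture projector. Matrices are 4x4 nested lists in
# row-vector convention (p' = p * M). All names are hypothetical.


def transform_point(p, m):
    x, y, z = p
    return tuple(x * m[0][c] + y * m[1][c] + z * m[2][c] + m[3][c] for c in range(3))


def rigid_transform(angle_degrees, translation):
    """Rotation about the z axis followed by a translation."""
    a = math.radians(angle_degrees)
    c, s = math.cos(a), math.sin(a)
    tx, ty, tz = translation
    return [[c, s, 0.0, 0.0],
            [-s, c, 0.0, 0.0],
            [0.0, 0.0, 1.0, 0.0],
            [tx, ty, tz, 1.0]]


def invert_rigid(m):
    """Inverse of rotation+translation: transpose the rotation, then map -t through it."""
    r_t = [[m[j][i] for j in range(3)] for i in range(3)]
    t = m[3][:3]
    inv_t = [-sum(t[k] * r_t[k][c] for k in range(3)) for c in range(3)]
    return [r_t[0] + [0.0], r_t[1] + [0.0], r_t[2] + [0.0], inv_t + [1.0]]


def projected_uvw(vertex, teapot_to_world):
    world = transform_point(vertex, teapot_to_world)  # what MWorldPosition generates
    render_to_teapot = invert_rigid(teapot_to_world)  # relative transform, world -> teapot
    return transform_point(world, render_to_teapot)   # texture matrix applied by the projector


vertex = (0.5, -1.0, 2.0)  # a point on the teapot, in teapot space
for placement in (rigid_transform(0, (0, 0, 0)), rigid_transform(37, (5, -2, 1))):
    world = transform_point(vertex, placement)
    uvw = projected_uvw(vertex, placement)
    print([round(c, 9) for c in world], [round(c, 9) for c in uvw])
```

The world-space coordinates change with each placement, while the projected coordinates stay at the teapot-space vertex, which is why the texture now travels with the teapot.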

It may seem a little circuitous to convert the teapot vertices to world space to generate the texture coordinates, and then convert the texture coordinates back to teapot space again; after all, didn't they start out in teapot space? It would have saved a lot of effort just to keep them there! Why doesn't Panda just provide an MObjectPosition mode that would generate texture coordinates from the object's native vertex positions?

That's a fair question, and MObjectPosition would be a fine idea for a model as simple as the teapot, which is after all just one node. But for more sophisticated models, which can contain multiple sub-nodes each with their own coordinate space, the idea of MObjectPosition is less useful, unless you truly wanted each sub-node to be re-textured within its own coordinate space. Rather than provide this feature of questionable value, Panda3D prefers to give you the ability to specify the particular coordinate space you had in mind, unambiguously.
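
A small sketch of the sub-node problem, using a hypothetical teapot split into a "body" and a "lid" sub-node, each offset from the teapot node by a translation. A per-node MObjectPosition (which, as described above, Panda does not provide) would give the same teapot-space point different texture coordinates depending on which sub-node owns the vertex, while coordinates expressed in one explicitly chosen space agree.

```python
# Illustrative sketch with a hypothetical teapot made of two sub-nodes, each
# offset from the teapot node by a translation (in teapot space).

SUBNODE_OFFSETS = {"body": (1.0, 0.0, 0.0), "lid": (0.5, 0.0, 0.0)}


def object_space_uvw(subnode, vertex):
    # A hypothetical per-node "MObjectPosition": each sub-node's own coordinates,
    # regardless of which sub-node it is.
    return vertex


def teapot_space_uvw(subnode, vertex):
    # Coordinates in one space you chose explicitly (here, the teapot node).
    return tuple(a + b for a, b in zip(vertex, SUBNODE_OFFSETS[subnode]))


# The same teapot-space point (1, 0, 0), owned once by each sub-node:
body_vertex, lid_vertex = (0.0, 0.0, 0.0), (0.5, 0.0, 0.0)
print(object_space_uvw("body", body_vertex), object_space_uvw("lid", lid_vertex))
# (0.0, 0.0, 0.0) (0.5, 0.0, 0.0)  -> same spot, different coordinates
print(teapot_space_uvw("body", body_vertex), teapot_space_uvw("lid", lid_vertex))
# (1.0, 0.0, 0.0) (1.0, 0.0, 0.0)  -> consistent across sub-nodes
```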

Note that you only want to call setTexProjector() when you are using the MWorldPosition mode. The other modes are generally computed from vectors (for instance, normals), not positions, and it usually doesn't make sense to apply a relative transform to a vector.
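
One way to see why a relative transform suits positions but not vectors: assuming the texture matrix treats generated coordinates as points (an implicit fourth component of 1), any translation in the relative transform is added to them. The sketch below uses a hypothetical translation-only transform; a position is moved as intended, while a normal stored in (u, v, w) is offset and no longer points the same way, unlike a true direction transform with a fourth component of 0.

```python
# Illustrative sketch, assuming the texture matrix treats generated (u, v, w)
# as a point with an implicit fourth component of 1.


def transform_homogeneous(v4, m):
    # Row vector (x, y, z, w) times a 4x4 matrix.
    return tuple(sum(v4[r] * m[r][c] for r in range(4)) for c in range(4))


# Hypothetical relative transform: a pure translation by (-5, 0, 0).
RELATIVE = [[1, 0, 0, 0],
            [0, 1, 0, 0],
            [0, 0, 1, 0],
            [-5, 0, 0, 1]]

position = (6.0, 0.0, 0.0, 1.0)          # MWorldPosition-style coordinates: a point
normal_as_point = (0.0, 0.0, 1.0, 1.0)   # MWorldNormal-style coordinates, treated as a point
normal_as_vector = (0.0, 0.0, 1.0, 0.0)  # the same normal as a true direction

print(transform_homogeneous(position, RELATIVE))          # (1.0, 0.0, 0.0, 1.0)
print(transform_homogeneous(normal_as_point, RELATIVE))   # (-5.0, 0.0, 1.0, 1.0)
print(transform_homogeneous(normal_as_vector, RELATIVE))  # (0.0, 0.0, 1.0, 0.0)
```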
